Breast cancer is a serious disease with a wide array of treatment options that depend on the severity of the cancer and other critical factors. One of these factors is metastasis, the spread of the cancer beyond its original site. Metastasis can drastically affect both the prognosis of the disease and the treatment strategies used by medical professionals. One way to confirm metastasis of breast cancer is to analyze tissue biopsies taken from the sentinel lymph nodes. This is typically done by a pathologist, who examines high-resolution whole-slide images (WSIs) of stained lymph node tissue. While this method is reliable, the process is time-consuming and tedious, which leaves room for improvement. Slow turnaround times and small mistakes during this process have inspired the research community and Visikol to develop new technologies that can aid pathologists, improving workflow and increasing detection accuracy. Many of these technologies revolve around deep learning and computer vision.

The Camelyon dataset, an influential dataset first released in 2016, was created in the hope that it would spur new deep learning and computer vision technologies to help solve this important problem. It includes 1,399 WSIs of H&E-stained sentinel lymph node tissue collected from five medical centers in the Netherlands, covering both tissue with metastases and tissue without. The dataset was accompanied by the Camelyon challenge, a competition to develop automated algorithms for two tasks: 1) determine the probability that a lymph node tissue sample contains cancer, and 2) detect the cancerous regions within the lymph node tissue. We focus on task 2 in this project.

To detect tumor regions in a WSI, we use a convolutional neural network (CNN), which can take advantage of the large amount of labeled data provided by the dataset. Because each WSI contains billions of pixels, we cannot train the network on an entire image at once. Instead, we first split each WSI into many smaller image ‘patches’. Using these patches, we train the network on the training data and evaluate its performance on the test data using five classification metrics: accuracy, precision, recall, F1 score, and specificity. The network never sees the test data during training, so test performance is a good approximation of how well it will generalize to new data it has never encountered. With a trained network, we can then run predictions across a WSI to locate its tumor regions.
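The patching step can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's code: it assumes the slide region is already loaded as an `(H, W, C)` array, uses non-overlapping square tiles, and crops any partial tiles at the right and bottom edges. The function name `extract_patches` and the `patch_size` value are hypothetical, since the post does not state the patch size used. In practice a gigapixel WSI is usually read region by region (for example with OpenSlide's `read_region`) rather than loaded whole.

```python
import numpy as np


def extract_patches(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping square tiles.

    Hypothetical helper: returns an array of shape (N, patch_size, patch_size, C)
    in row-major tile order. Leftover rows/columns that do not fill a whole
    tile are cropped (an assumption; padding is another common choice).
    """
    h, w, c = image.shape
    n_rows, n_cols = h // patch_size, w // patch_size
    cropped = image[: n_rows * patch_size, : n_cols * patch_size]
    # (n_rows*p, n_cols*p, C) -> (n_rows, p, n_cols, p, C)
    tiles = cropped.reshape(n_rows, patch_size, n_cols, patch_size, c)
    # -> (n_rows, n_cols, p, p, C) -> (N, p, p, C)
    tiles = tiles.transpose(0, 2, 1, 3, 4)
    return tiles.reshape(-1, patch_size, patch_size, c)


# Toy "slide": a 10 x 12 RGB array with unique values so tiles are easy to check.
slide = np.arange(10 * 12 * 3).reshape(10, 12, 3)
patches = extract_patches(slide, patch_size=4)
print(patches.shape)  # (6, 4, 4, 3): a 2 x 3 grid of tiles; 2 leftover rows are cropped
```

Keeping the tiles in row-major order matters later: it is what allows per-patch predictions to be placed back at the correct position in the slide.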

We used the MobileNetV2 CNN architecture, a parameter-efficient architecture originally designed for mobile devices. We chose it because it has fewer trainable parameters and a slightly higher frame rate than some deeper architectures, which keeps inference fast and memory usage low during whole-slide prediction. During training, we use a binary cross-entropy loss function to update the network's parameters. This loss is designed for binary classification: it penalizes both false positive and false negative predictions, and the penalty grows the more confidently a prediction is wrong. With each training iteration, the network's parameters are updated with the overall goal of minimizing this loss.
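For a predicted tumor probability `p` and a ground-truth label `y` (1 = tumor, 0 = healthy), binary cross-entropy is `-(y * log(p) + (1 - y) * log(1 - p))`. The plain-Python sketch below is illustrative only: the function names are hypothetical, and the clamping constant `eps` is an assumption added to avoid `log(0)`. It shows that a confident false negative and a confident false positive receive the same large penalty, far more than a confident correct prediction. In PyTorch the same loss is available as `torch.nn.BCELoss` (on probabilities) or `torch.nn.BCEWithLogitsLoss` (on raw logits); the post does not say which variant was used.

```python
import math


def binary_cross_entropy(p: float, y: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy for a single prediction.

    p: predicted probability that the patch is tumor (0..1)
    y: ground-truth label (1 = tumor, 0 = healthy)
    """
    p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def mean_bce(probs, labels) -> float:
    """Average loss over a batch, the quantity minimized during training."""
    losses = [binary_cross_entropy(p, y) for p, y in zip(probs, labels)]
    return sum(losses) / len(losses)
```

For example, `binary_cross_entropy(0.9, 1)` is about 0.105, while both the confident false negative `binary_cross_entropy(0.1, 1)` and the confident false positive `binary_cross_entropy(0.9, 0)` are about 2.303.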

After training, we evaluate the network on the held-out test data and reach an accuracy of 89.2% on a test set of 32,768 image patches. Analyzing the other classification metrics and the confusion matrix, we conclude that the network makes fewer false positive errors than false negative errors (its precision is higher than its recall), so it is slightly better at correctly classifying healthy tissue than at catching tumor tissue.
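All of the metrics mentioned above derive from the four confusion-matrix counts. The sketch below computes them in plain Python with tumor as the positive class. The function name and the example counts are made up for illustration; they are not the project's results.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Binary classification metrics from confusion-matrix counts.

    Tumor is treated as the positive class; zero denominators yield 0.0.
    """
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # sensitivity / true positive rate
    specificity = tn / (tn + fp) if tn + fp else 0.0  # true negative rate
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "specificity": specificity,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


# Hypothetical counts, chosen only to illustrate fewer false positives than false negatives.
metrics = classification_metrics(tp=80, fp=5, tn=95, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these made-up counts, precision (about 0.94) exceeds recall (0.80) and specificity (0.95) exceeds recall, which is the same pattern the evaluation above describes: fewer false positives than false negatives.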

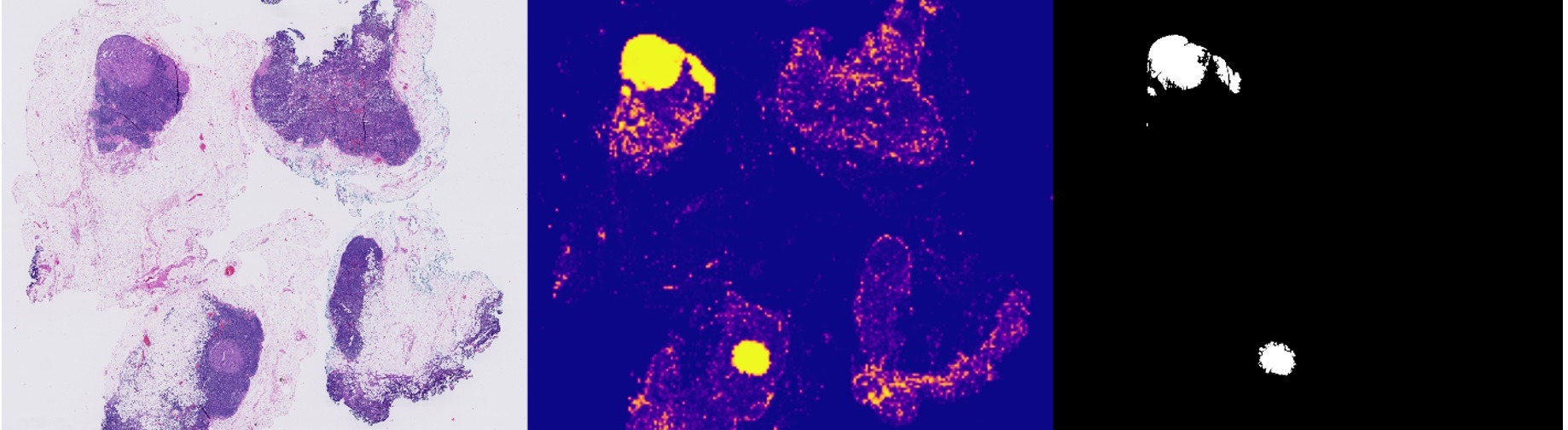

To make a prediction on a WSI, we first break it into image patches in the same way we did for training, since the network expects a small input size. We then make a prediction on every patch extracted from the WSI and arrange the predictions in the same spatial layout as the original WSI. The result is a tumor probability map in which each pixel holds a probability score between 0 and 1 instead of an RGB value. Based on the two WSI test cases shown in the accompanying presentation, we conclude that our trained network can accurately detect tumor regions in WSIs of lymph node tissue.
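Reassembling per-patch predictions into a map can be sketched with NumPy as well. This assumes the patches were extracted in row-major order on a regular, non-overlapping grid, that the network outputs one tumor probability per patch, and that a 0.5 threshold turns the map into a binary tumor mask. The function name, the example probabilities, and the threshold are all hypothetical; the post does not describe how its map was upsampled or thresholded.

```python
import numpy as np


def assemble_probability_map(patch_probs, n_rows: int, n_cols: int, patch_size: int = 1) -> np.ndarray:
    """Place per-patch tumor probabilities (row-major order) back on the slide grid.

    With patch_size=1 each map pixel stands for one patch; a larger patch_size
    repeats each score over a patch_size x patch_size block so the map lines up
    with the (cropped) WSI pixel grid.
    """
    probs = np.asarray(patch_probs, dtype=np.float32)
    if probs.size != n_rows * n_cols:
        raise ValueError("expected exactly one probability per patch")
    grid = probs.reshape(n_rows, n_cols)
    # Kronecker product with a block of ones upsamples each patch score.
    return np.kron(grid, np.ones((patch_size, patch_size), dtype=np.float32))


# Hypothetical network outputs for a 2 x 3 grid of 2 x 2-pixel patches.
prob_map = assemble_probability_map([0.1, 0.9, 0.2, 0.8, 0.05, 0.95], n_rows=2, n_cols=3, patch_size=2)
tumor_mask = prob_map >= 0.5  # assumed decision threshold
print(prob_map.shape, int(tumor_mask.sum()))  # (4, 6) 12
```

Upsampling with `np.kron` keeps the sketch short; for a real gigapixel slide, storing the map at patch resolution (`patch_size=1`) and scaling only for display would save a great deal of memory.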

In this project, we explore a novel technology that can hopefully one day improve the overall care for people with breast cancer. We implement a convolutional neural network to automatically detect tumor regions in whole-slide images of sentinel lymph node tissue and present our results on several test cases. This project is one of the many ways Visikol is committed to contributing to the pathology community. Please reach out with any questions and concerns related to your digital pathology projects.

To check out the PyTorch library for breast cancer metastasis detection in whole-slide images of sentinel lymph node tissue from the Camelyon dataset, click the GitHub link below: