As a leading provider of digital pathology services and solutions, we have worked with dozens of pharmaceutical companies to help them transform their qualitative, human-driven histopathology workflows into quantitative, computer-based applications. While much of this work tends to be in the preclinical space, we are one of the few companies to have developed clinical machine learning digital pathology tools that have been deployed in the clinic, such as our recent work with Enzyvant Therapeutics on RVT-802. The fascinating thing about the digital pathology space is that while companies like PathAI, Proscia and PaigeAI are raising tens of millions of dollars in VC funding to accelerate the development of technologies in this field, the underlying digital pathology and AI tools required to address clinical shortcomings have been around for over twenty years (remember PAPNET?). The barriers to adopting these paradigm-shifting technologies in the clinic tend not to be technical. Instead, they involve business models, go-to-market strategy, validation and, ultimately, a failure to provide clear clinical or financial value compared to the current gold-standard treatment regimen. For example, what benefit does Google's AI breast cancer identification platform provide if being 5% more accurate changes neither how patients are treated nor their outcomes? Furthermore, if such a system is a black box based on neural networks, how can we be certain that it will not at some point introduce an unexpected false-negative or false-positive result?

In this blog post, we will focus primarily on the problem of validation, which is often overlooked but is crucial to introducing a digital pathology tool into the clinic.

The basic premise of validating a digital pathology tool for use in the clinic is much the same as validating any other assay, such as an HPLC assay: the range, precision, robustness, specificity and accuracy of the tool need to be validated prior to routine use. At Visikol, when we develop a digital pathology tool for a Client, we always build these ICH analytical assay validation parameters into the application's IQ/OQ/PQ (installation, operational and performance qualification) process in order to demonstrate its performance prior to use.

Specificity

Digital pathology assays are typically designed for a specific use case, such as melanoma, and more specifically melanoma sentinel lymph node biopsies, in which case the assay is not appropriate for any other type of tissue. Because digital pathology tools employing machine learning are typically based on a training library of data, it is crucial that the input to the application is specific to the use case; otherwise, there might be unforeseen outcomes. For example, if a primary melanoma tumor were used instead of a sentinel lymph node, the results provided by the application would not be valid and could be misleading. We typically find it challenging to define specificity programmatically, as the application would need to be able to discriminate among a multitude of tissue types. Therefore, in our assays specificity (i.e. which tissue goes into the system) is typically controlled by a pathologist and standard operating procedures.
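
Because the tissue type is confirmed by a pathologist rather than detected by the software, the software side of specificity can be as simple as refusing to run unless the pathologist-recorded metadata matches the assay's intended use. The sketch below assumes hypothetical field names (`indication`, `specimen_type`, `pathologist_signoff`) rather than any particular LIMS or slide format, and it is only an illustration of that gate, not a description of our production system.

```python
from dataclasses import dataclass

# Hypothetical intended-use definition, mirroring the melanoma sentinel lymph node example.
INTENDED_USE = {
    "indication": "melanoma",
    "specimen_type": "sentinel lymph node biopsy",
}


@dataclass
class SlideRecord:
    slide_id: str
    indication: str            # recorded by the reviewing pathologist (hypothetical field)
    specimen_type: str         # recorded by the reviewing pathologist (hypothetical field)
    pathologist_signoff: bool  # pathologist confirmed the tissue per SOP (hypothetical field)


def specificity_gate(slide: SlideRecord, intended_use: dict = INTENDED_USE) -> tuple[bool, list[str]]:
    """Return (accepted, reasons) for a slide checked against the assay's intended use."""
    reasons = []
    if not slide.pathologist_signoff:
        reasons.append("missing pathologist sign-off on tissue type")
    if slide.indication.strip().lower() != intended_use["indication"]:
        reasons.append(f"indication {slide.indication!r} is outside intended use")
    if slide.specimen_type.strip().lower() != intended_use["specimen_type"]:
        reasons.append(f"specimen type {slide.specimen_type!r} is outside intended use")
    return (not reasons, reasons)


# A primary tumor submitted to a sentinel-lymph-node assay is rejected before analysis.
print(specificity_gate(SlideRecord("S-002", "melanoma", "primary tumor", True)))
```

The check only enforces what the SOP already requires. It cannot tell a lymph node from a primary tumor by itself, which is exactly why the pathologist's sign-off stays in the loop.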

Range and Robustness

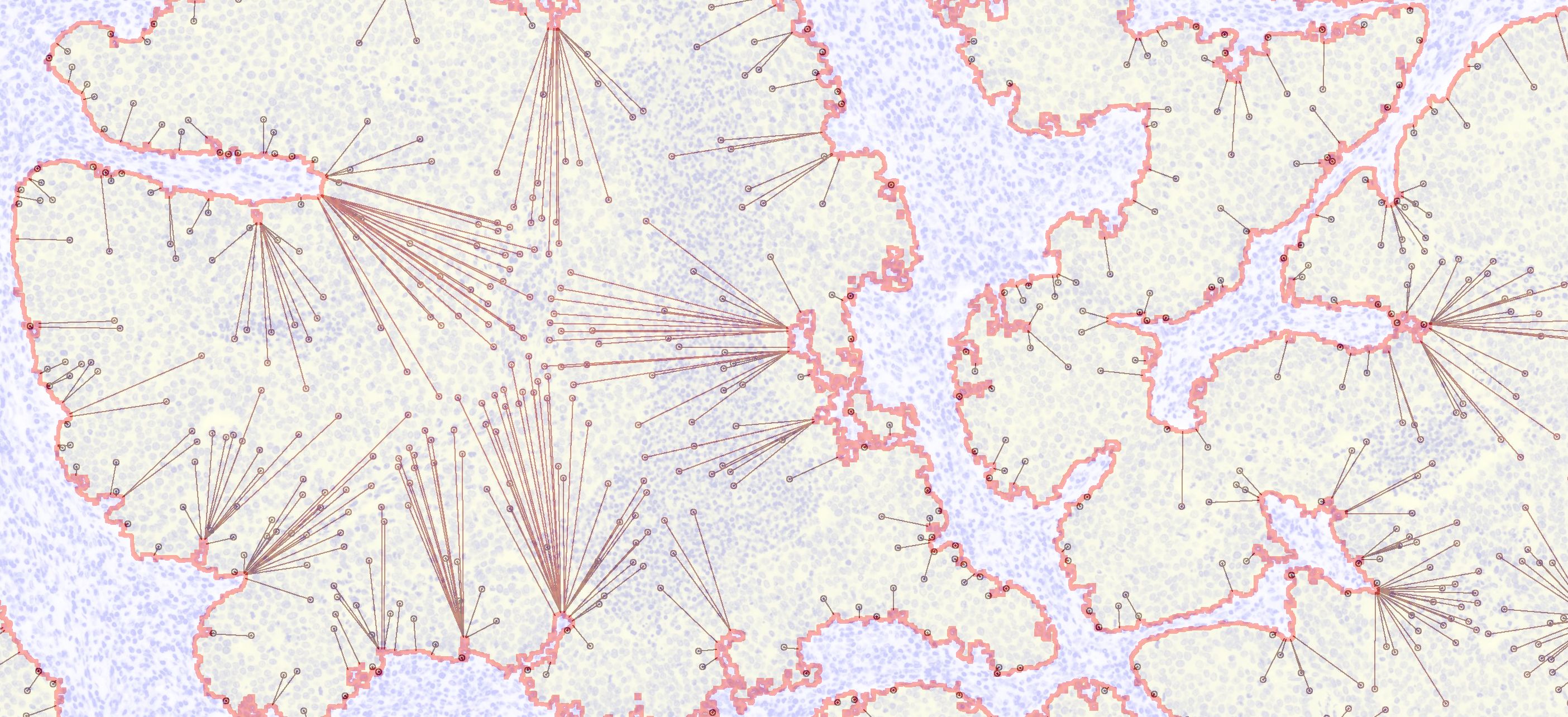

When looking at H&E or IHC images, you will notice a large degree of variation between slides of the same tissues and diseases prepared by different technicians at different times. This makes digital pathology challenging, as it becomes uncertain whether slides are being characterized by their underlying features or by artifacts of varied staining. Therefore, a crucial component of developing a digital pathology application is to define the parameters that affect the underlying image analysis algorithms and the acceptable range for each parameter. For example, in most digital pathology applications there is an acceptable range for how much tissue needs to be on a slide for analysis, and there is also an acceptable range for staining intensity, which we often refer to as tissue entropy. If there is too little tissue on a slide, most image analysis algorithms will struggle with cell segmentation, and if staining is too dark or too light the same problems can crop up. For all of our assays, we evaluate each of these parameters and quantitatively define its acceptable range. Before we analyze a slide, we run it through a set of system suitability criteria (e.g. correct metadata, correct file type) to determine whether it meets the assay's defined acceptable ranges. Slides that fail these criteria are excluded from analysis.
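
A system suitability check of this kind can be sketched as a function that returns a list of failures, where an empty list means the slide may proceed. This is a minimal sketch under stated assumptions. The image is a grayscale thumbnail given as a list of pixel rows. "Tissue" is any pixel darker than a hypothetical background threshold. "Tissue entropy" is interpreted here as the Shannon entropy of the tissue-pixel histogram, alongside mean intensity. The acceptance ranges, allowed file extensions and required metadata keys are all invented for illustration.

```python
import math
import os
from collections import Counter

# Hypothetical acceptance ranges; real values would come from the assay's validation data.
ACCEPTANCE = {
    "tissue_fraction": (0.10, 0.95),  # fraction of pixels classified as tissue
    "mean_intensity": (60.0, 200.0),  # mean grayscale value of tissue pixels (0 = black)
    "entropy_bits": (2.0, 8.0),       # Shannon entropy of the tissue-pixel histogram
}
ALLOWED_EXTENSIONS = {".svs", ".ndpi", ".tif", ".tiff"}  # hypothetical whitelist
REQUIRED_METADATA = {"stain", "magnification"}           # hypothetical required keys
BACKGROUND_THRESHOLD = 220  # pixels at or above this value are treated as bare glass


def slide_metrics(gray):
    """Tissue fraction, mean tissue intensity and histogram entropy of a grayscale image."""
    pixels = [p for row in gray for p in row]
    tissue = [p for p in pixels if p < BACKGROUND_THRESHOLD]
    if not tissue:
        return {"tissue_fraction": 0.0, "mean_intensity": math.nan, "entropy_bits": 0.0}
    n = len(tissue)
    entropy = sum(-(c / n) * math.log2(c / n) for c in Counter(tissue).values())
    return {
        "tissue_fraction": n / len(pixels),
        "mean_intensity": sum(tissue) / n,
        "entropy_bits": entropy,
    }


def system_suitability(filename, metadata, gray):
    """Return a list of failures; an empty list means the slide may be analyzed."""
    failures = []
    ext = os.path.splitext(filename)[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        failures.append(f"file type {ext or '(none)'} not allowed")
    missing = REQUIRED_METADATA - set(metadata)
    if missing:
        failures.append(f"missing metadata: {sorted(missing)}")
    for name, value in slide_metrics(gray).items():
        low, high = ACCEPTANCE[name]
        if not low <= value <= high:  # NaN fails every comparison, so it is rejected too
            failures.append(f"{name}={value:.3g} outside [{low}, {high}]")
    return failures


# Synthetic 10x10 thumbnail: top half bare glass, bottom half graded "tissue".
gray = [[255] * 10 for _ in range(5)] + [[50 + 10 * j for j in range(10)] for _ in range(5)]
print(system_suitability("slide_001.svs", {"stain": "H&E", "magnification": "20x"}, gray))
```

Returning every failure, rather than stopping at the first one, gives the reviewer a complete reason for rejecting a slide.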

Accuracy and Precision

Typically, when we develop a digital pathology assay, it contains several image analysis and data analysis components, each of which needs to be validated individually. For example, a machine learning application might have three major operations: 1) build a training library, 2) evaluate an unknown sample and 3) classify the unknown sample based on the training library. In this case, we would develop a validation plan for each of these operations, starting with the generation of the training library. Here, we would determine quantitatively the values that should be generated for each image in the training library, such as the average nucleus size in a given image. With these expected values in hand, we could then run the training-library generation step multiple times and demonstrate that the values the assay generates match the expected values every time it is run.
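
The training-library check can be sketched as follows. Build the library several times from the same images and confirm that each run reproduces values that were determined independently in advance. This is a minimal sketch, assuming each training image is already reduced to a binary nucleus mask and that the library stores one feature per image (mean nucleus area in pixels, via 4-connected component labeling). `build_training_library` and `validate_library_build` are hypothetical names, not part of any real toolkit.

```python
from collections import deque


def component_areas(mask):
    """Areas (pixel counts) of 4-connected foreground regions in a binary mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    areas = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, area = deque([(r, c)]), 0
                while queue:  # breadth-first flood fill of one nucleus
                    y, x = queue.popleft()
                    area += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                areas.append(area)
    return areas


def build_training_library(masks):
    """Hypothetical library builder: one feature (mean nucleus area) per image."""
    library = {}
    for name, mask in masks.items():
        areas = component_areas(mask)
        library[name] = sum(areas) / len(areas) if areas else 0.0
    return library


def validate_library_build(masks, expected, runs=5, tol=1e-9):
    """Rebuild the library `runs` times; every run must reproduce the expected values."""
    for run in range(runs):
        library = build_training_library(masks)
        for name, value in expected.items():
            if abs(library[name] - value) > tol:
                return False, f"run {run}: {name} gave {library[name]}, expected {value}"
    return True, "all runs matched expected values"


# Hand-checked ground truth: img_a has nuclei of 4 and 2 pixels, img_b has four 1-pixel nuclei.
masks = {
    "img_a": [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 1], [0, 0, 0, 1]],
    "img_b": [[1, 0, 1], [0, 0, 0], [1, 0, 1]],
}
expected = {"img_a": 3.0, "img_b": 1.0}
print(validate_library_build(masks, expected))
```

The expected values are computed by hand here. In a real validation they would come from an independent measurement, so the test does not simply compare the software with itself.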

Moving on to the second operation of the assay, we typically validate the analysis of an unknown sample by generating many samples with known features. For example, we might generate images containing circles or squares of known dimensions and then demonstrate reproducibly that the assay outputs the expected values for these images. Lastly, we input images with known outcomes into the assay and demonstrate reproducibly that these images are classified correctly.
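
Synthetic phantoms of this kind make it possible to express accuracy and precision as numbers. The sketch below is one way to do that, under stated assumptions. A simple foreground pixel count stands in for the assay's measurement step. Accuracy is reported as mean percent recovery against the analytic area (side² for a square, πr² for a disc). Precision is reported as the coefficient of variation when the same disc is drawn at different fractional positions. The known-outcome check at the end takes any hypothetical `classify` callable. All function names are illustrative, and any acceptance limits you would apply to recovery or CV are assay-specific.

```python
import math
import statistics


def square_phantom(size, side, top, left):
    """Binary image containing one axis-aligned square of known side length."""
    return [[1 if top <= y < top + side and left <= x < left + side else 0
             for x in range(size)] for y in range(size)]


def disc_phantom(size, radius, cy, cx):
    """Binary image containing one disc; a pixel is set if its centre lies inside."""
    return [[1 if (x + 0.5 - cx) ** 2 + (y + 0.5 - cy) ** 2 <= radius ** 2 else 0
             for x in range(size)] for y in range(size)]


def measure_area(mask):
    """Stand-in for the assay's measurement step: foreground pixel count."""
    return sum(sum(row) for row in mask)


def accuracy_and_precision(measurements, true_value):
    """Mean recovery (%) against the known value and coefficient of variation (%)."""
    mean = statistics.mean(measurements)
    recovery = 100.0 * mean / true_value
    cv = 100.0 * statistics.stdev(measurements) / mean
    return recovery, cv


def classification_check(classify, labelled_images, runs=3):
    """True if every known-outcome image receives its expected label on every run."""
    return all(classify(img) == label
               for _ in range(runs) for img, label in labelled_images)


# Draw the same disc at several fractional positions to mimic run-to-run variation.
radius = 12.0
offsets = [(32.0 + dy, 32.0 + dx) for dy in (0.0, 0.25, 0.5) for dx in (0.0, 0.3, 0.7)]
areas = [measure_area(disc_phantom(64, radius, cy, cx)) for cy, cx in offsets]
recovery, cv = accuracy_and_precision(areas, math.pi * radius ** 2)
print(f"disc recovery {recovery:.1f}%  CV {cv:.2f}%")
```

An axis-aligned square on whole-pixel boundaries should be recovered exactly. A rasterized disc will deviate slightly from πr², which is why a tolerance, rather than exact equality, is the natural acceptance criterion for curved shapes.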

Biased vs Unbiased Machine Learning

A question we commonly receive from researchers is whether we use a biased or unbiased framework for our analysis. Our use of human-based training depends a lot on the nature of the project, but we generally see many challenges with deploying human-based training applications (i.e. neural networks or AI) in the clinic because of validation. It is nearly impossible to understand why a neural network made a particular decision, and validating these systems is therefore problematic because their decisions cannot be deterministically defined. This leaves a lot of uncertainty about which parameters change these decisions and whether strange combinations of factors (e.g. staining intensity, tissue size) might adversely affect the outcome of the assay. Therefore, while these approaches might perform better than humans at classifying tissues from specific data sets, it is unknown whether this will hold true for all data sets.
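
One way to search for the "strange combinations of factors" described above is a grid sweep. Evaluate a classifier on every combination of perturbed inputs and record where its decision differs from the nominal case. A sweep only covers the points it samples, so it cannot prove a black box is safe. It does show why single-factor tests are not enough. In this minimal sketch, `toy_classifier` is a made-up rule standing in for any model, and the factor names (`nuclear_area`, `stain_intensity`, `tissue_mm2`) and thresholds are invented for illustration. Neither factor alone flips the toy's decision, but their combination does.

```python
import itertools


def decision_sweep(classify, baseline, factors):
    """Evaluate `classify` on every combination of factor values.

    baseline: dict of nominal input factors.
    factors:  dict mapping factor name -> list of values to try.
    Returns the baseline decision and the combinations whose decision differs from it.
    """
    reference = classify(baseline)
    names = list(factors)
    flips = []
    for values in itertools.product(*(factors[n] for n in names)):
        combo = dict(zip(names, values))
        decision = classify({**baseline, **combo})
        if decision != reference:
            flips.append((combo, decision))
    return reference, flips


def toy_classifier(x):
    """Toy rule with a deliberate interaction between staining and tissue size."""
    score = x["nuclear_area"]
    # Hypothetical artifact: over-staining inflates the score, but only on small samples.
    if x["tissue_mm2"] < 20 and x["stain_intensity"] > 1.0:
        score *= 1.0 + 0.5 * (x["stain_intensity"] - 1.0)
    return "positive" if score > 50 else "negative"


baseline = {"nuclear_area": 45.0, "stain_intensity": 1.0, "tissue_mm2": 50.0}
reference, flips = decision_sweep(
    toy_classifier, baseline,
    {"stain_intensity": [0.6, 0.8, 1.0, 1.2, 1.4], "tissue_mm2": [5, 10, 20, 50, 100]},
)
for combo, decision in flips:
    print(combo, "->", decision)
```

With a transparent rule like the toy, the flip region can be read directly from the code and the sweep merely confirms it. With a neural network, the sweep results are the only evidence available, and nothing guarantees the behavior between sampled points.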